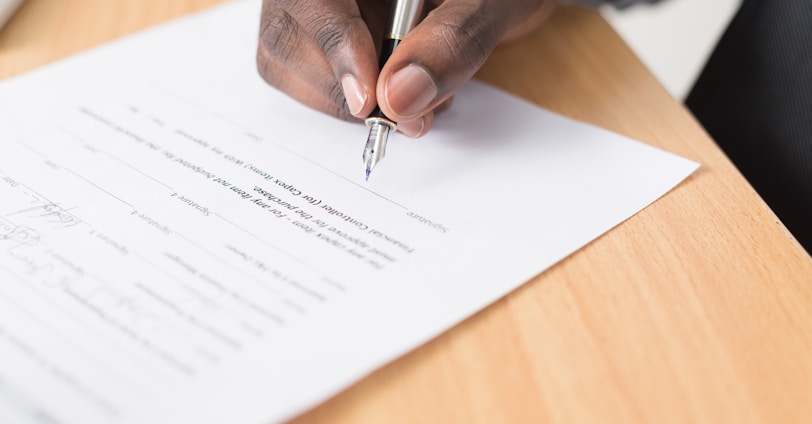

Fast Document Generation

Get instant access to our innovative tool for creating legal, business, and personal documents quickly and efficiently.

Our Work

Projects

Explore our portfolio of successful document generation projects. We have helped clients across a range of industries streamline their paperwork and work more efficiently.

Client Testimonials

Hear what our clients have to say about our document generation services, and discover how our fast, reliable solutions have transformed their businesses.

About Us

We are a team of experienced professionals dedicated to simplifying document generation. Our goal is to provide straightforward, effective solutions for all your document needs.

Contact

info@rsatechnologies.com